#WEB SCRAPER CLICK BUTTON HOW TO#

Output of our script after running node index.js

We did it! Our first scraped element is here, right in the terminal. Now, let's expand it and fetch all the quotes on the current page.

Now that we know how to fetch one quote, let's tweak our code a bit to get all the quotes and extract their data one by one.
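Fetching every quote on the page, rather than just one, could be sketched roughly as follows. This is only an illustration: the URL, the `.quote`, `.text`, and `.author` selectors, and the function names are assumptions, not values given in this article, so adjust them to the page you are scraping.

```javascript
// Sketch only: the URL and the CSS selectors are assumptions,
// not values taken from the article text.
const QUOTES_URL = "http://quotes.toscrape.com/";

// Collect the text and author of every quote on the current page.
// `page` is a Puppeteer Page (or any object with a compatible $$eval).
async function getAllQuotes(page) {
  // $$eval runs the callback in the browser with all matching elements.
  return page.$$eval(".quote", (quoteElements) =>
    quoteElements.map((quote) => ({
      text: quote.querySelector(".text").innerText,
      author: quote.querySelector(".author").innerText,
    }))
  );
}

// Open the page, print every quote found on it, then close the browser.
async function main() {
  // Required here so getAllQuotes can be reused without loading Puppeteer.
  const puppeteer = require("puppeteer");
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto(QUOTES_URL, { waitUntil: "domcontentloaded" });
    console.log(await getAllQuotes(page));
  } finally {
    await browser.close();
  }
}
```

Calling `main()` at the bottom of index.js and running node index.js should then print an array of objects, one per quote, instead of a single element.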

Create a new project folder using mkdir. It'll contain the code of our future scraper.

mkdir first-puppeteer-scraper-example

Now, it's time to initialize your Node.js repository with a package.json file. It's helpful to add information to the repository and NPM packages, such as the Puppeteer library. Initialize the package.json file using the npm init command:

npm init -y

After typing this command, you should find this package.json file in your repository tree.

While MS Office does have support for Mac, it is way harder to write a working VBA scraper on it.

#WEB SCRAPER CLICK BUTTON WINDOWS#

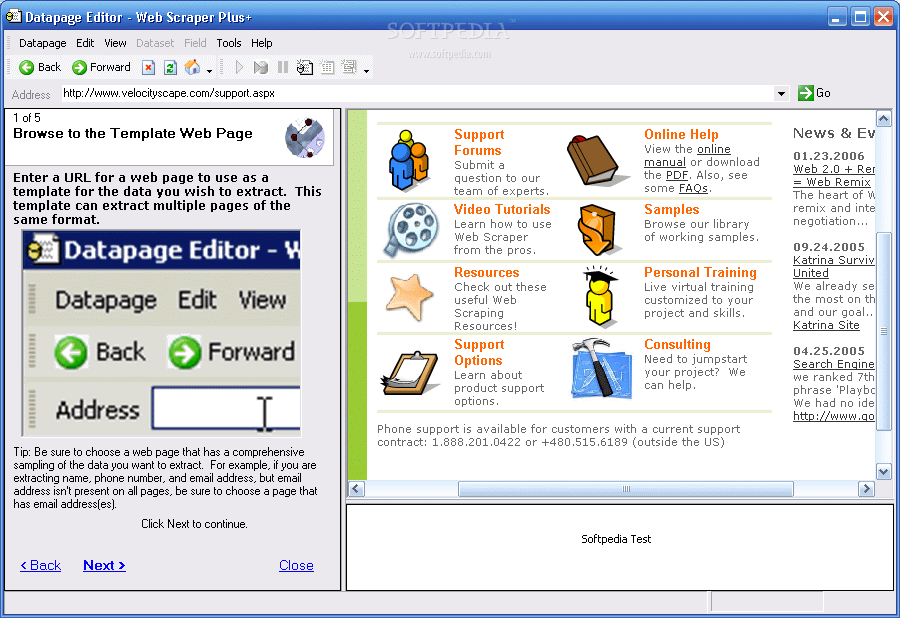

Everything will be taken care of by the VBA script, including log-in, scrolling, button clicks, etc. Cons: Only works in Windows. VBA scrapers are not cross-platform.

In order to click on elements on a web page, like links or buttons, you'll need to use something.

Using scripts, we can extract the data we need from a website for various purposes, such as creating databases, running analytics, and more.

Disclaimer: Be careful when doing web scraping. Always make sure you're scraping sites that allow it, and that you stay within ethical and legal limits.

JavaScript and Node.js offer various libraries that make web scraping easier. For simple data extraction, you can use Axios to fetch an API response or a website's HTML. But if you're looking to do more advanced tasks, including automation, you'll need libraries such as Puppeteer, Cheerio, or Nightmare (don't worry, the name is Nightmare, but it's not that bad to use 😆).

I'll introduce the basics of web scraping in JavaScript and Node.js using Puppeteer in this article. I structured the writing to show you some basics of fetching information on a website and clicking a button (for example, moving to the next page). At the end of this introduction, I'll recommend ways to practice and learn more by improving the project we just created.

Prerequisites

Before diving in and scraping our first page together using JavaScript, Node.js, and the HTML DOM, I'd recommend having a basic understanding of these technologies. It'll improve your learning and understanding of the topic.

Let's dive in! 🤿

How to Initialize Your First Puppeteer Scraper

New project, new folder! First, create the first-puppeteer-scraper-example folder on your computer.

It's pretty easy to use and set up as well.
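The button-clicking step mentioned above (for example, moving to the next page) might look something like this with Puppeteer. It is a minimal sketch: the `.pager > .next > a` selector and the `goToNextPage` name are assumptions made for illustration, and the real selector depends on the site's markup.

```javascript
// Assumption: the "next page" link matches this CSS selector.
const NEXT_BUTTON_SELECTOR = ".pager > .next > a";

// Click the "next" link if it exists and wait for the new page to load.
// Returns true when a navigation happened, false when there is no next link.
// `page` is a Puppeteer Page.
async function goToNextPage(page) {
  const nextButton = await page.$(NEXT_BUTTON_SELECTOR);
  if (nextButton === null) {
    return false; // Last page: nothing to click.
  }
  // Start waiting for the navigation before clicking, so the
  // navigation event cannot fire before we are listening for it.
  await Promise.all([
    page.waitForNavigation(),
    page.click(NEXT_BUTTON_SELECTOR),
  ]);
  return true;
}
```

A scraper could call `goToNextPage(page)` in a loop, extracting data after each call, and stop once it returns `false`.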

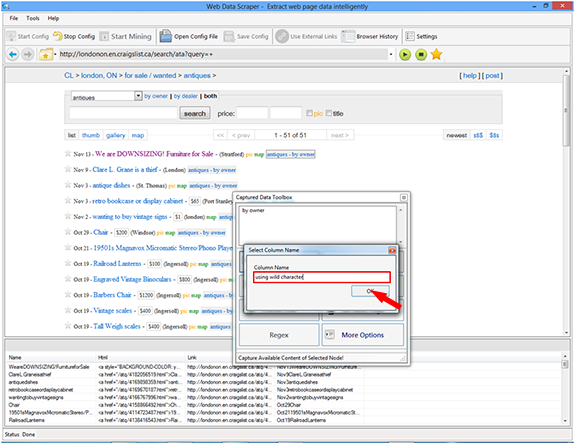

If you're looking for a no-code web scraping program, then look no further than Agenty. This Chrome extension runs entirely out of your browser, and it is surprisingly powerful for just an extension.

Welcome to the world of web scraping! Have you ever needed data from a website but found it hard to access it in a structured format? This is where web scraping comes in.

To input the text I was using the XPath (and it works), but it changes every time I log in to the site: search = Could I instead use part of the input bar code above so that I can rerun my script multiple times?

My second point is 2) how to click a button. Again, I was using the XPath, but it changes after every login. My code was: button = I inspected the button code and I would instead like to use data-vertical="PEOPLE" or any other of these unique fields (the tag button is not enough, since there are many buttons on the LinkedIn site).

By the way, what are all these inner fields called? I believe part of my problem arises from a lack of understanding of HTML code.
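One possible way around an XPath that changes on every login is to target the element by a stable attribute with a CSS attribute selector, such as the `data-vertical="PEOPLE"` field mentioned in the question. The sketch below uses JavaScript with Puppeteer to match the scraper from earlier in this article, not the Python script from the question; the helper names are hypothetical, and whether that attribute really stays stable on the site is an assumption. The selector string itself is plain CSS.

```javascript
// Build a CSS attribute selector such as button[data-vertical="PEOPLE"].
// Backslashes and double quotes in the value are escaped for the CSS string.
function attributeSelector(tag, attribute, value) {
  const escaped = String(value).replace(/\\/g, "\\\\").replace(/"/g, '\\"');
  return `${tag}[${attribute}="${escaped}"]`;
}

// Wait for the element to appear, then click it.
// `page` is a Puppeteer Page; this helper name is hypothetical.
async function clickByAttribute(page, tag, attribute, value) {
  const selector = attributeSelector(tag, attribute, value);
  await page.waitForSelector(selector);
  await page.click(selector);
  return selector;
}
```

For instance, `clickByAttribute(page, "button", "data-vertical", "PEOPLE")` would click the first button carrying that attribute, regardless of where it sits in the page structure.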

I was doing some web scraping with Python (LinkedIn site) and got stuck with the following 2 issues: 1) How do I input text on a search bar? 2) How to click a button? First, this is the search bar code:
